What is a psychometric property? What are the psychometric properties of research instruments or tests? This article answers these questions. It also differentiates reliability from validity in relation to the preparation of research instruments. Further, it gives tips on how to reduce the number of items in a questionnaire for greater efficiency in administration.

One of the most difficult parts of research writing comes when your panel of examiners scrutinizes or questions the psychometric properties of your instrument. Psychometric properties may sound new to you, but they are not actually new.

To avoid this unnecessary anxiety, arm yourself with a good knowledge and understanding of psychometric properties. This understanding will help you develop good research instruments.

The knowledge you gain from this article will give you confidence in this area of the research process.

Psychometric Property Defined

A psychometric property describes the aptness of a test or research instrument that seeks to measure human characteristics in order to understand complex human behavior.

Does the research instrument measure what it intends to measure? Or is it producing spurious data that will cause researchers to make wrong conclusions?

Standardized tests have already undergone checks on these properties; researcher-made questionnaires, at the very least, must also satisfy them. Researchers must ensure that their tests adhere to good research practice through pilot testing and iterative revisions that build in the essential psychometric properties.

The psychometric properties of a test are broadly divided into two:

- its reliability, and

- its validity.

In simple words, psychometric properties refer to the reliability and validity of the instrument. So, what is the difference between the two?

Reliability refers to the consistency of the test results, while validity refers to their accuracy. An instrument should measure what it ought to measure, and do so accurately and dependably. Its reliability helps you obtain a valid assessment; its validity gives you confidence in making predictions.

In the next section, I explain these two psychometric properties in more detail by describing how an instrument’s reliability and validity are determined and interpreted.

Two Psychometric Properties in Research Instrument Development

Instrument’s Reliability

How can you say that your instrument is reliable?

Although there are many types of reliability tests, the one most often examined is the internal consistency of the test.

When you present the results of your research, your panel of examiners may look for Cronbach’s alpha or Kuder-Richardson Formula 20 (KR-20) computations. If you cannot do the analysis yourself, you may ask a statistician to help you process and analyze the data using a reliable statistical software application such as Gnumeric or the ubiquitous MS Excel.
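For items scored 0 or 1 (for example, right or wrong), KR-20 is computed as (k / (k − 1)) · (1 − Σpq / σ²), where k is the number of items, p is the proportion of respondents who answered an item correctly, q = 1 − p, and σ² is the variance of the respondents' total scores. The Python sketch below is an illustration, not the article's own procedure. It assumes population variances throughout and uses a small hypothetical response set, with one row per respondent.

```python
from statistics import pvariance

def kr20(responses):
    """Kuder-Richardson Formula 20 for dichotomous (0/1) items.

    responses: list of respondents, each a list of 0/1 item scores.
    """
    k = len(responses[0])                    # number of items
    n = len(responses)                       # number of respondents
    totals = [sum(row) for row in responses] # each respondent's total score
    pq_sum = 0.0
    for j in range(k):
        p = sum(row[j] for row in responses) / n  # proportion correct on item j
        pq_sum += p * (1 - p)                     # p * q for item j
    return (k / (k - 1)) * (1 - pq_sum / pvariance(totals))

# Hypothetical answers of five respondents to four items
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(responses), 3))  # 0.8
```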

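If your intention is to determine the inter-correlations of the items in the instrument and whether these items measure the same construct, Cronbach’s alpha is suggested. KR-20 is the special case of Cronbach’s alpha for dichotomous items (e.g., right/wrong answers). Cronbach’s alpha also handles multi-point scales such as Likert items.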

I describe Cronbach’s alpha in more detail in the next section.

Cronbach’s Alpha

Cronbach’s alpha measures how closely related the items in a research instrument are, that is, its internal consistency or reliability. Internal consistency refers to how reliably the test items that are meant to measure the same construct actually do so. Constructs are ideas or theories that are not easily observable, such as fear, anxiety, or attitude.

If the value of Cronbach’s alpha is high, i.e., from 0.75 to above 0.90, the scale used is reliable (Table 1).

Table 1. Cronbach’s alpha coefficient and interpretation.

| Cronbach’s alpha | Internal consistency |
| --- | --- |
| α ≥ 0.90 | Excellent |
| 0.70 ≤ α < 0.90 | Good |
| 0.60 ≤ α < 0.70 | Acceptable |
| 0.50 ≤ α < 0.60 | Poor |
| α < 0.50 | Unacceptable |
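If you report alpha for several scales, a small helper that applies the Table 1 bands can keep your interpretations consistent. This Python sketch simply encodes the cutoffs in Table 1. Other textbooks use slightly different bands, so adjust the thresholds to the convention your panel follows.

```python
def interpret_alpha(alpha):
    """Return the Table 1 internal-consistency label for a Cronbach's alpha value."""
    if alpha >= 0.90:
        return "Excellent"
    if alpha >= 0.70:
        return "Good"
    if alpha >= 0.60:
        return "Acceptable"
    if alpha >= 0.50:
        return "Poor"
    return "Unacceptable"

print(interpret_alpha(0.82))  # Good
```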

Take note, however, that you cannot just accept the value of Cronbach’s alpha blindly. A high alpha does not by itself prove that the items measure a single construct. You therefore need to confirm this reliability measure with another test that checks whether the scale used in the test or survey is unidimensional. Exploratory factor analysis is one such method.
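A quick way to screen for dimensionality before running a full analysis is to look at the eigenvalues of the item correlation matrix. The Python sketch below is not exploratory factor analysis. It applies Kaiser's eigenvalue-greater-than-one rule, which is a rough and often criticized heuristic: more than one eigenvalue above 1 hints that the items may tap more than one dimension. The sketch finds eigenvalues by power iteration with deflation so that it needs only the standard library, and the two correlation matrices are hypothetical. For your actual study, run a proper exploratory factor analysis in your statistical software.

```python
import random

def top_eigenpair(matrix, iters=500, seed=0):
    """Largest eigenvalue and unit eigenvector of a symmetric PSD matrix (power iteration)."""
    n = len(matrix)
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(n)]   # arbitrary positive start vector
    for _ in range(iters):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        if norm < 1e-12:                         # nothing left after deflation
            return 0.0, v
        v = [x / norm for x in w]
    # Rayleigh quotient v^T A v (v has unit length)
    lam = sum(v[i] * matrix[i][j] * v[j] for i in range(n) for j in range(n))
    return lam, v

def kaiser_count(corr):
    """Count eigenvalues of a correlation matrix that are greater than 1."""
    n = len(corr)
    a = [row[:] for row in corr]                 # work on a copy
    count = 0
    for step in range(n):
        # A fresh start vector per step avoids being orthogonal to the next eigenvector
        lam, v = top_eigenpair(a, seed=step)
        if lam <= 1.0:                           # eigenvalues arrive largest first
            break
        count += 1
        # Deflate: remove the component just found, A <- A - lam * v v^T
        a = [[a[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return count

# Hypothetical: four items that all correlate 0.5 with each other
one_factor = [[1.0 if i == j else 0.5 for j in range(4)] for i in range(4)]
# Hypothetical: two pairs of items, correlated within pairs only
two_factor = [
    [1.0, 0.6, 0.0, 0.0],
    [0.6, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.6],
    [0.0, 0.0, 0.6, 1.0],
]
print(kaiser_count(one_factor), kaiser_count(two_factor))  # 1 2
```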

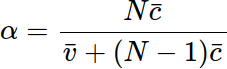

Cronbach’s alpha can be written as a function of the number of test items and the average inter-correlation among the items. Below, for conceptual purposes, we show the formula for Cronbach’s alpha:

$$\alpha = \frac{N\bar{c}}{\bar{v} + (N - 1)\bar{c}}$$

where N is the number of items,

c̄ is the average inter-item covariance among the items, and

v̄ is the average item variance.
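To see the formula at work, the Python sketch below computes N, c̄, and v̄ from a small hypothetical set of Likert responses (five respondents, three items) and plugs them into the formula. It uses sample covariances (n − 1 denominator). The result is identical to the more familiar form α = N/(N − 1) · (1 − Σσ²ᵢ / σ²ₓ), because the variance of the total scores equals N·v̄ + N(N − 1)·c̄.

```python
def sample_cov(x, y):
    """Sample covariance with an n - 1 denominator."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)

def cronbach_alpha(scores):
    """Cronbach's alpha as N*c_bar / (v_bar + (N - 1)*c_bar).

    scores: one row per respondent, one column per item.
    """
    items = list(zip(*scores))                           # one tuple of scores per item
    N = len(items)
    v_bar = sum(sample_cov(i, i) for i in items) / N     # average item variance
    pair_covs = [sample_cov(items[a], items[b])
                 for a in range(N) for b in range(a + 1, N)]
    c_bar = sum(pair_covs) / len(pair_covs)              # average inter-item covariance
    return N * c_bar / (v_bar + (N - 1) * c_bar)

# Hypothetical 5-point Likert responses: 5 respondents x 3 items
scores = [
    [4, 5, 4],
    [3, 4, 3],
    [5, 5, 4],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(scores), 3))  # 0.904
```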

If the average inter-item correlation stays the same, adding more test or survey items increases Cronbach’s alpha. Conversely, a low average inter-item correlation produces a low alpha.
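Both effects can be checked with the standardized form of alpha, which uses the average inter-item correlation r̄ in place of covariances: α = N·r̄ / (1 + (N − 1)·r̄). The Python sketch below holds r̄ fixed at an illustrative 0.3 and varies the number of items. It then shows what a weaker average correlation of 0.1 does to a 10-item scale. The values of r̄ are arbitrary examples.

```python
def standardized_alpha(n_items, mean_r):
    """Standardized Cronbach's alpha: N*r / (1 + (N - 1)*r), with r the average inter-item correlation."""
    return n_items * mean_r / (1 + (n_items - 1) * mean_r)

# Same average correlation, more items: alpha rises
for n in (5, 10, 20):
    print(n, round(standardized_alpha(n, 0.3), 3))
# 5 0.682
# 10 0.811
# 20 0.896

# Same number of items, weaker average correlation: alpha drops
print(round(standardized_alpha(10, 0.1), 3))  # 0.526
```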

If you want to skip the statistical jargon, the video tutorial below demonstrates, with examples, how to compute Cronbach’s alpha in MS Excel.

As the professor in the video mentions, a Cronbach’s alpha of 0.75 or higher indicates that you have a respectable and consistent research instrument. Its items consistently measure a common construct or characteristic. In other words, the instrument is internally consistent.

Instrument’s Validity

There are many types of validity measures. One of the most commonly used is construct validity, which requires that the construct, or the independent variable, be accurately defined.

According to David Kingsbury, a construct is the behavior or outcome a researcher seeks to measure in the study. It is often reflected in the independent variable.

To illustrate, if the independent variable is the school principals’ leadership style, the sub-scales of that construct are the types of leadership style such as authoritative, delegative and participative.

Construct validity can be established in two ways. Factor analysis checks whether the items in the instrument have good validity measures. Bivariate correlation checks whether the items in each sub-scale have good inter-item correlations. An item is considered good if its correlation is significant at p < 0.05.
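One way to run the bivariate-correlation check is to correlate each item with the sum of the other items in its sub-scale, known as the corrected item-total correlation. The item is left out of the total so that it does not inflate its own correlation. The Python sketch below does this for one hypothetical sub-scale. To stay within the standard library, it approximates the p-value with the Fisher z transformation, which is rough for small samples. Statistical packages, such as SciPy's `scipy.stats.pearsonr`, report exact t-based p-values instead. The data and the keep/review labels are illustrative assumptions.

```python
import math
from statistics import NormalDist

def pearson_r(x, y):
    """Pearson correlation coefficient (assumes neither x nor y is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def approx_p_value(r, n):
    """Approximate two-sided p-value for 'no correlation' via Fisher z (needs n > 3)."""
    if abs(r) >= 1.0:
        return 0.0
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))

def item_rest_correlations(subscale):
    """Correlate each item with the sum of the OTHER items in its sub-scale."""
    n = len(subscale)
    results = []
    for j in range(len(subscale[0])):
        item = [row[j] for row in subscale]
        rest = [sum(row) - row[j] for row in subscale]  # total without item j
        r = pearson_r(item, rest)
        results.append((j + 1, r, approx_p_value(r, n)))
    return results

# Hypothetical sub-scale: 8 respondents x 3 items on a 5-point scale
subscale = [
    [5, 5, 4], [4, 4, 4], [2, 1, 2], [3, 3, 3],
    [1, 2, 1], [5, 4, 5], [2, 2, 3], [4, 5, 4],
]
for item, r, p in item_rest_correlations(subscale):
    verdict = "keep" if p < 0.05 else "review"
    print(f"item {item}: r = {r:.2f}, p = {p:.4f} -> {verdict}")
```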

Reducing the Number of Items in the Research Instrument

There are instances when you need to reduce the number of items in your research instrument for greater efficiency in administration. If your instrument has too many items, chances are your respondents will not read them thoroughly, which will eventually give you poor results.

Let’s admit it: nowadays, respondents don’t like answering too many questions, which they see as a waste of time. With this in mind, how can you reduce the number of items in your questionnaire?

Here are your options:

1. Validate the contents of your research instrument.

Aside from establishing the validity of the instrument, content validation can help you decide which items to delete, thus reducing the number of items in your questionnaire. Content validation is done by experts in the field you are investigating.

But who are considered “experts”? They are people who hold doctorate degrees in that field and have practiced their profession for many years. Usually, at least three experts should review your instrument.

However, if your paper fulfills a master’s degree requirement and no one in that area holds a PhD, those with a master’s degree can serve as experts. If master’s degree holders are also unavailable, the panel of examiners may allow you to enlist, as “experts,” people who have practiced their profession in that field for at least ten years.

These experts know the subject area you want to investigate in depth. They can judge which items should be included to measure a particular variable (construct) and its sub-variables (sub-constructs) in your study. They can also tell you which items in your questionnaire could be deleted.
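The article leaves the experts' judgment qualitative. One widely used way to summarize their ratings, which the article does not describe, is the item-level content validity index (I-CVI). Each expert rates each item's relevance on a 4-point scale, and the I-CVI is the share of experts who give a 3 or 4. The Python sketch below computes the I-CVI and flags low-scoring items as candidates for deletion. The 4-point scale, the 0.78 cutoff, and the item labels are assumptions for illustration, and published guidance on the cutoff varies with the number of experts.

```python
def item_cvi(ratings, relevant=(3, 4)):
    """Share of experts who rated the item as relevant (3 or 4 on a 4-point scale)."""
    return sum(1 for r in ratings if r in relevant) / len(ratings)

def flag_items(expert_ratings, cutoff=0.78):
    """expert_ratings: {item_label: [one rating per expert]}.
    Returns the items whose I-CVI falls below the (assumed) cutoff."""
    return [item for item, ratings in expert_ratings.items()
            if item_cvi(ratings) < cutoff]

# Hypothetical relevance ratings from three experts
ratings = {
    "Q1": [4, 4, 3],   # 3 of 3 relevant -> I-CVI 1.00
    "Q2": [4, 2, 3],   # 2 of 3 relevant -> I-CVI 0.67
    "Q3": [1, 2, 3],   # 1 of 3 relevant -> I-CVI 0.33
}
print(flag_items(ratings))  # ['Q2', 'Q3']
```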

2. Do factor analysis for data reduction.

A factor analysis will help you determine the construct validity of your instrument and, with the help of your statistician, decide which items to cull from it.
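After your statistical software produces the factor loadings, the culling step often comes down to dropping items that load weakly on the factor they were written for. The Python sketch below illustrates only that step. The loadings are hypothetical, and the 0.40 cutoff is an assumed rule of thumb rather than a fixed standard. It also ignores cross-loadings on other factors, which a full review should consider.

```python
def weak_items(loadings, cutoff=0.40):
    """loadings: {item_label: loading on the item's intended factor}.
    Returns items whose absolute loading is below the cutoff, weakest first."""
    weak = [(abs(value), item) for item, value in loadings.items() if abs(value) < cutoff]
    return [item for _, item in sorted(weak)]

# Hypothetical loadings from a factor analysis
loadings = {"Q1": 0.72, "Q2": 0.35, "Q3": 0.81, "Q4": -0.12, "Q5": 0.44}
print(weak_items(loadings))  # ['Q4', 'Q2']
```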

3. Establish both content and construct validity.

Having the two types of validity, I believe, will make your instrument better.

As the researcher, you decide, with the help of your research adviser, which items to delete based on the results of the content and construct validity checks.

Generally, instruments that have undergone content and construct validation are shorter and can give more accurate results than those that have not. This gives you more confidence in interpreting your data.

References

1. Kingsbury, D. (2012). How to validate a research instrument. Retrieved October 16, 2013, from http://www.ehow.com/how_2277596_validate-research-instrument.html

2. Grindstaff, T. (n.d.). The reliability & validity of psychological tests. Retrieved October 16, 2013, from http://www.ehow.com/facts_7282618_reliability-validity-psychological-tests.html

3. Lavrakas, P. J. (2008). Encyclopedia of survey research methods. Sage Publications.

4. Renata, R. (2013). The real difference between reliability and validity. Retrieved from http://www.ehow.com/info_8481668_real-difference-between-reliability-validity.html

5. Cronbach’s alpha. (n.d.). In Wikipedia. Retrieved October 17, 2013, from http://en.wikipedia.org/wiki/Cronbach%27s_alpha

© 2013 October 17 M. G. Alvior, updated 3 December 2021
